The Boombox: Visual Reconstruction from Acoustic Vibrations

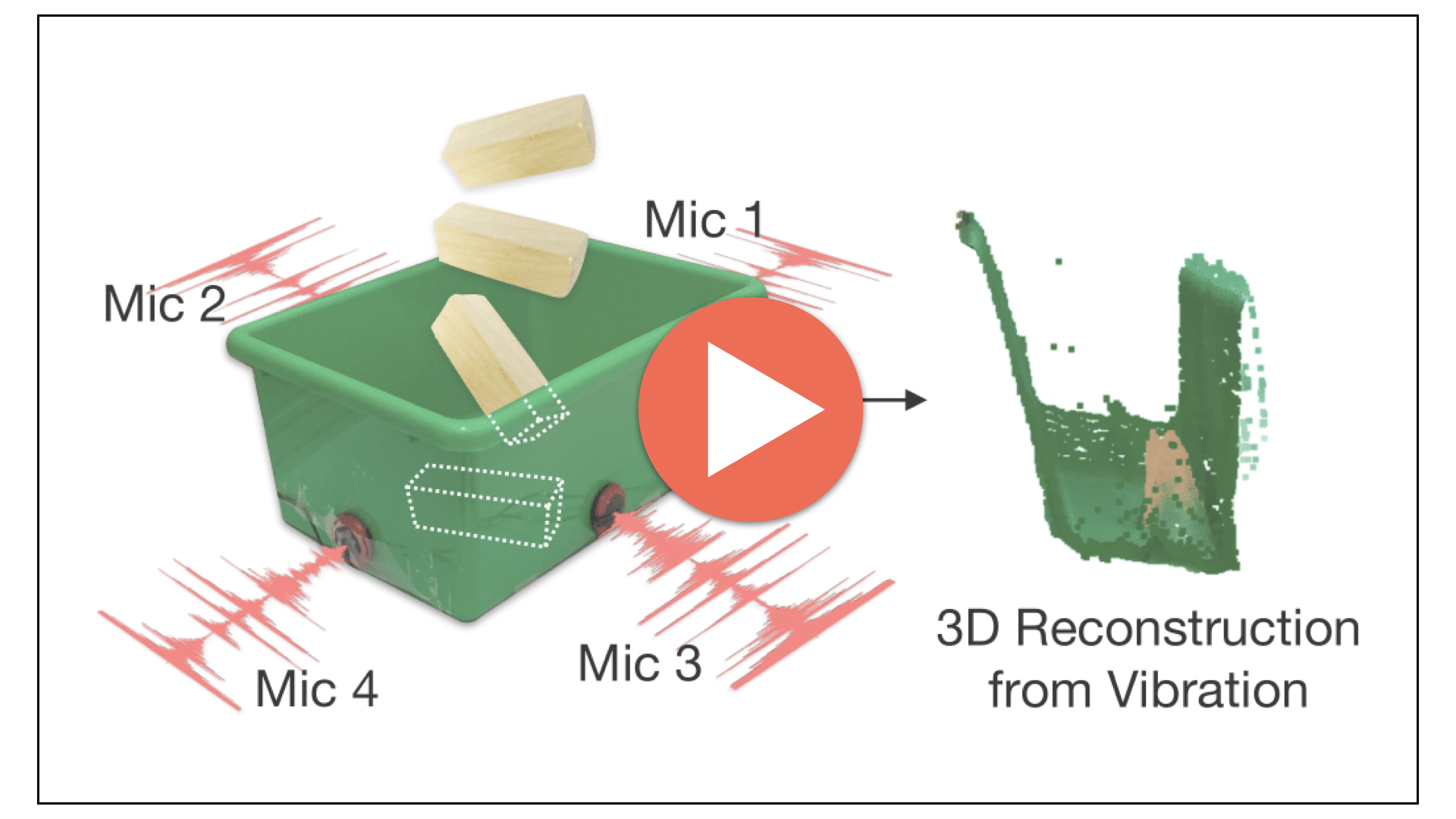

We introduce The Boombox, a container that uses acoustic vibrations to reconstruct an image of its inside contents. When an object interacts with the container, it produces small acoustic vibrations. The exact vibration characteristics depend on the physical properties of the box and the object. We demonstrate how to use this incidental signal to predict visual structure. After learning, our approach remains effective even when a camera cannot view inside the box. Although we use low-cost and low-power contact microphones to detect the vibrations, our results show that learning from multi-modal data enables us to transform cheap acoustic sensors into rich visual sensors. Due to the ubiquity of containers, we believe integrating perception capabilities into them will enable new applications in human-computer interaction and robotics.

Video

Paper -- Conference on Robot Learning (CoRL 2021)

Latest version: arXiv:2105.08052 [cs.CV] or here

Code and Dataset

We release the code at Boombox. You can follow the instructions on our GitHub page to use the code and the dataset. If you just want to download the dataset, you can click here (~2 GB).

Team

Columbia University

Acknowledgements

We thank Philippe Wyder, Dídac Surís, Basile Van Hoorick and Robert Kwiatkowski for helpful feedback. This research is based on work partially supported by NSF NRI Award #1925157, NSF CAREER Award #2046910, DARPA MTO grant L2M Program HR0011-18-2-0020, and an Amazon Research Award. MC is supported by a CAIT Amazon PhD fellowship. We thank NVIDIA for GPU donations. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies, either expressed or implied, of the sponsors.

Contact

If you have any questions, please feel free to contact Boyuan Chen.